Prompt Engineering: Talk to AI So It Actually Listens

Fundamentals and advanced techniques to prompt ChatGPT, Claude, and beyond

Introduction

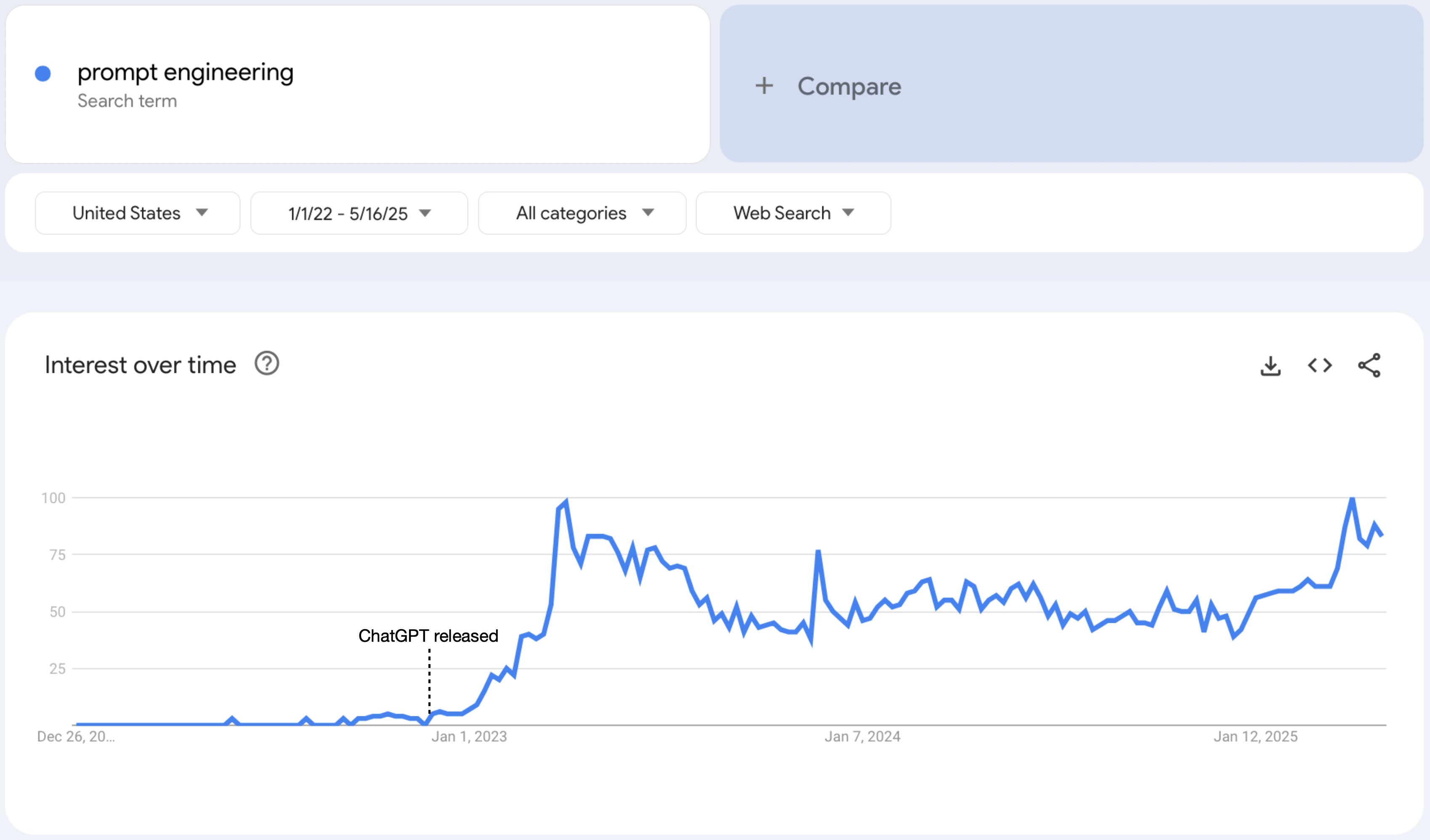

Since OpenAI's ChatGPT hit the mainstream in late 2022, a new skill set has quietly become one of the most valuable: prompt engineering. Search interest in the term exploded within months of ChatGPT's debut, as the Google Trends chart for the term shows.

Why the hype? Because prompt engineering turns everyday users into power users! The difference between a vague request and a well-crafted prompt can be the difference between a generic paragraph and a usable business strategy, code snippet, or product idea.

In this article, we explore:

- What is Prompt Engineering?

- Why is it important?

- Basic principles to get started

- Advanced techniques to go further

Let’s dive in.

What Is Prompt Engineering?

First, let’s define a prompt: it’s the input you give to an AI model to generate a desired output. For most modern tools like ChatGPT, Claude, or Gemini, prompts are text instructions sent to large language models (LLMs) that produce human-like responses.

Prompt engineering is the art and science of designing those inputs to reliably produce high-quality, relevant results. It’s part instruction writing, part UX design, and part reverse psychology.

As Andrej Karpathy, a co-founder of OpenAI, famously put it: “The hottest new programming language is English.”

Why Is Prompt Engineering Important?

✅ 1. Better Output = Better Value

The old saying applies: Garbage in, garbage out. AI models are incredibly powerful if you can speak their language well. A clear, thoughtful prompt increases your odds of getting a useful response the first time.

💸 2. Lower Cost in Production

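Models are typically billed per token, both for the prompt you send and for the text they generate (a token is a chunk of text, often a whole word or part of one). Effective prompts can: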

- Avoid unnecessary verbosity

- Focus the model on what’s needed

- Reduce back-and-forth refinement cycles.

These savings add up over time, especially at scale.
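To put rough numbers on the cost argument, here is a minimal Python sketch of per-token billing. The prices are hypothetical placeholders (real rates vary by provider and model), and the token counts are assumed rather than measured with a tokenizer.

```python
# Minimal cost estimator for an API billed per token.
# ASSUMPTION: prices are hypothetical placeholders, quoted per 1M tokens.
INPUT_PRICE_PER_M = 2.50    # hypothetical $ per 1M input (prompt) tokens
OUTPUT_PRICE_PER_M = 10.00  # hypothetical $ per 1M output (generated) tokens


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request under the assumed prices."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def monthly_cost(requests_per_day: int, input_tokens: int,
                 output_tokens: int, days: int = 30) -> float:
    """Scale the per-request cost to a month of traffic."""
    return request_cost(input_tokens, output_tokens) * requests_per_day * days


# Same 500-token prompt, 10,000 requests per day:
# a verbose 800-token answer vs. a focused 200-token answer.
verbose = monthly_cost(10_000, 500, 800)
focused = monthly_cost(10_000, 500, 200)
print(f"verbose: ${verbose:,.2f}/month, focused: ${focused:,.2f}/month")
```

Under these made-up prices, trimming the typical answer from 800 to 200 output tokens cuts the monthly bill from about $2,775 to about $975.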

⚙️ 3. More Control in Complex Workflows

From customer support to legal summaries, a solid prompt gives you more predictability and reliability. These are critical for production use cases.

Basic Principles of Prompt Engineering

🎯 1. Write Like a Manager

Think of prompt writing like giving instructions to a new team member. A good manager doesn’t say, “Do something useful.” Instead, they clarify the goal, outline the format, and explain who it’s for. The same applies to prompting AI: the best prompts leave as little room for misinterpretation as possible.

Include:

- Goal: What exactly do you want it to do?

- Format: Bullet points? Table? JSON?

- Context: Where is this being used? Why does it matter?

- Audience: Who will consume the result?

Example:

“Summarize the following article in 3 bullet points for a busy CEO of a pharmaceutical company looking to expand into the EMEA region. Use concise, business-friendly language. Each bullet must be no more than 3 sentences.”
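If you assemble prompts in code, keeping the four ingredients as separate fields makes it harder to forget one. Below is a minimal Python sketch. `PromptSpec` and `build_prompt` are hypothetical names, and the `Task:`/`Format:` labels are one possible layout rather than a syntax any model requires.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """The four ingredients: goal, format, context, audience."""
    goal: str
    output_format: str
    context: str
    audience: str


def build_prompt(spec: PromptSpec, material: str) -> str:
    """Assemble a prompt that states every ingredient explicitly."""
    return "\n".join([
        f"Task: {spec.goal}",
        f"Format: {spec.output_format}",
        f"Context: {spec.context}",
        f"Audience: {spec.audience}",
        "",
        "Material:",
        material,
    ])


spec = PromptSpec(
    goal="Summarize the article below in 3 bullet points.",
    output_format="Bullets only; each bullet no more than 3 sentences.",
    context="The reader is evaluating expansion into the EMEA region.",
    audience="A busy pharmaceutical-company CEO; use concise, business-friendly language.",
)
prompt = build_prompt(spec, "<article text here>")
print(prompt)
```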

⚓ 2. Re-anchor Your Objective at the End

LLMs often appear to give extra weight to instructions near the end of a prompt, a tendency commonly described as recency bias. Restating your goal at the end of the prompt helps keep the model aligned with the task.

Example:

“Remember: your task is to keep the summary focused, concise, and actionable for an executive reader.”
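In code, re-anchoring means restating the objective as the last thing the model reads, after any long input. Here is a minimal Python sketch. The `<document>` tags are an assumed convention for marking where pasted material starts and ends, not a requirement of any particular model, and `build_reanchored_prompt` is a hypothetical helper.

```python
def build_reanchored_prompt(task: str, document: str, reminder: str) -> str:
    """Task first, long input in the middle, objective restated last."""
    return (
        f"{task}\n\n"
        # ASSUMPTION: tags mark where the pasted material starts and ends.
        f"<document>\n{document}\n</document>\n\n"
        # The reminder is deliberately the final line of the prompt.
        f"Remember: {reminder}"
    )


prompt = build_reanchored_prompt(
    task="Summarize the article below for an executive reader.",
    document="...long article text...",
    reminder="your task is to keep the summary focused, concise, and "
             "actionable for an executive reader.",
)
print(prompt)
```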

🔗 3. Prompt Chaining

If a task has multiple steps, don’t cram them into one giant prompt. Prompt chaining breaks the process into smaller, logical steps so the model can complete one before moving on to the next. Each output feeds into the next prompt, which tends to improve accuracy and yield better results for multi-step reasoning.

Example:

Prompt 1: “Summarize the key challenges facing pharmaceutical expansion into EMEA from this article.”

Prompt 2: “Based on those challenges, list 3 questions a CEO might ask before investing.”

Prompt 3: “Generate a 1-slide outline answering those questions in bullet form.”
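The same three-step chain can be sketched in Python. `call_llm` stands for whatever client function you actually use. Here a hypothetical `fake_llm` stub is passed in so the sketch runs offline, and each template carries an assumed `{input}` placeholder that receives the previous step's output.

```python
from typing import Callable, List


def run_chain(templates: List[str], first_input: str,
              call_llm: Callable[[str], str]) -> List[str]:
    """Run prompt templates in order, feeding each output into the next one.

    Every template contains an {input} placeholder. All intermediate
    outputs are returned so each link of the chain can be inspected.
    """
    outputs: List[str] = []
    current = first_input
    for template in templates:
        prompt = template.format(input=current)  # insert previous output
        current = call_llm(prompt)
        outputs.append(current)
    return outputs


def fake_llm(prompt: str) -> str:
    """Hypothetical offline stand-in for a real model call."""
    first_line = prompt.splitlines()[0]
    return f"[model output for: {first_line}]"


templates = [
    "Summarize the key challenges facing pharmaceutical expansion "
    "into EMEA from this article:\n{input}",
    "Based on these challenges, list 3 questions a CEO might ask "
    "before investing:\n{input}",
    "Generate a 1-slide outline answering these questions in bullet form:\n{input}",
]

for step, output in enumerate(run_chain(templates, "<article text>", fake_llm), start=1):
    print(f"Step {step}: {output}")
```

Returning every intermediate output lets you inspect each link of the chain and spot where a result went wrong.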

Advanced Techniques in Prompt Engineering

Once you’ve mastered the basics, you can start using more advanced prompting methods. These techniques help you unlock more nuanced, reliable, and controllable outputs.

🧠 1. Few-Shot Prompting: Show, Don’t Just Tell

Rather than just telling the model what to do, you can show it through examples. This is called few-shot prompting: you give the model a pattern to mimic before asking it to generate its own response. The approach is especially useful for specialized tasks or strict output formats, e.g., sentiment classification or text-to-SQL generation.

Example:

You are a sentiment classifier for customer reviews of a clothing store. Your options are only Positive, Negative, Neutral, or Unclear. You must only reply with the sentiment you chose. Examples below:

"The delivery was incredibly fast!" → Positive

"The shirt shrunk after one wash." → Negative

"Customer service was okay, nothing special." → Neutral

"1+1=2" → Unclear

The next review is: "The item arrived damaged."
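Programmatically, a few-shot prompt is just instructions, labeled examples, and the new input. Below is a minimal Python sketch that rebuilds the prompt above. `few_shot_prompt` and `parse_label` are hypothetical helpers, and mapping unexpected replies to `Unclear` is an added assumption, not part of the original prompt.

```python
LABELS = {"Positive", "Negative", "Neutral", "Unclear"}

# Labeled examples copied from the prompt above.
EXAMPLES = [
    ("The delivery was incredibly fast!", "Positive"),
    ("The shirt shrunk after one wash.", "Negative"),
    ("Customer service was okay, nothing special.", "Neutral"),
    ("1+1=2", "Unclear"),
]

INSTRUCTIONS = (
    "You are a sentiment classifier for customer reviews of a clothing store. "
    "Your options are only Positive, Negative, Neutral, or Unclear. "
    "You must only reply with the sentiment you chose. Examples below:"
)


def few_shot_prompt(review: str) -> str:
    """Instructions, then labeled examples, then the new review."""
    lines = [INSTRUCTIONS, ""]
    lines += [f'"{text}" → {label}' for text, label in EXAMPLES]
    lines += ["", f'The next review is: "{review}"']
    return "\n".join(lines)


def parse_label(reply: str) -> str:
    """Normalize the model's reply; anything outside LABELS becomes Unclear."""
    cleaned = reply.strip().strip(".").capitalize()
    return cleaned if cleaned in LABELS else "Unclear"


print(few_shot_prompt("The item arrived damaged."))
```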

🔍 2. Chain-of-Thought Prompting: Guide the Reasoning

Chain-of-thought (CoT) prompting asks the model to work through its reasoning step by step before giving a final answer. This makes the model’s reasoning easier to inspect and is especially useful for logic, math, or other multi-step tasks that benefit from “thinking out loud.”

Example:

Prompt: If a train leaves the station at 3:00 p.m. and travels at 60 miles per hour, how far will it have gone by 5:30 p.m.? Let’s think step by step: first work out the time elapsed, then use it to calculate the distance.

ChatGPT (CoT response):

From 3:00 p.m. to 5:30 p.m. is 2.5 hours.

At 60 miles per hour, 60 × 2.5 = 150 miles.

Answer: 150 miles
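When calling a model from code, it helps to pair the step-by-step instruction with a fixed format for the final line, so the answer can be pulled out of the reasoning. Below is a minimal Python sketch. The `Answer:` convention and the helper names are assumptions, and `sample` reuses the response shown above rather than a live model call.

```python
import re
from typing import Optional

COT_INSTRUCTION = (
    "Let's think step by step. Show your reasoning, then give the result "
    "on a final line that starts with 'Answer:'."
)


def cot_prompt(question: str) -> str:
    """Append the step-by-step instruction and a fixed answer format."""
    return f"{question}\n\n{COT_INSTRUCTION}"


def extract_answer(response: str) -> Optional[str]:
    """Return the text of the last line starting with 'Answer:', or None."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


sample = (
    "From 3:00 p.m. to 5:30 p.m. is 2.5 hours.\n"
    "At 60 miles per hour, 60 × 2.5 = 150 miles.\n"
    "Answer: 150 miles"
)
print(cot_prompt("If a train leaves at 3:00 p.m. at 60 mph, how far has it gone by 5:30 p.m.?"))
print(extract_answer(sample))  # → 150 miles
```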

⚙️ 3. ReAct: Reason + Act for Multi-Step Tasks

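The ReAct (Reasoning + Acting) framework is designed for complex environments where the model needs to reason through a problem and take specific actions, like querying a database, looking something up, or deciding between next steps. The model alternates between reasoning (“Thought”) and acting (“Action”), and uses the result of each action (an “Observation”) to decide what to do next.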

ReAct is especially common in agentic applications, where the model works with tools or APIs, but it can be simulated in plain prompts too. In production tools, this format can help manage how the model interacts with multiple steps, sources, or decision branches.

Example:

I’m looking for a gift idea under $50 for someone who loves hiking. Think through the possibilities first, then give a specific suggestion.

Response:

Thought: People who enjoy hiking might appreciate something practical. Some common gear includes hydration packs, portable water filters, or trail snacks.

Action: Suggest a product that fits the budget and is well-reviewed.

Final Suggestion: Consider the LifeStraw personal water filter ($19.95) — compact, practical, and ideal for outdoor use.
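The single-turn example above only simulates ReAct. In an agentic setup, your code runs the loop: the model writes a Thought and an Action, the program executes the action with a real tool, and the result goes back to the model as an Observation. Below is a minimal Python sketch under stated assumptions: `scripted_model` stands in for a real LLM, `search_catalog` is a hypothetical toy tool over made-up data, and the `Action: tool[argument]` syntax is one common convention, not a fixed standard.

```python
import re
from typing import Callable, Dict

# Hypothetical toy tool: a tiny in-memory catalog with made-up entries.
CATALOG = {
    "water filter": "Portable water filter, $20, 4.7 stars",
    "headlamp": "Rechargeable headlamp, $35, 4.5 stars",
}


def search_catalog(query: str) -> str:
    return CATALOG.get(query.lower(), "No results")


TOOLS: Dict[str, Callable[[str], str]] = {"search": search_catalog}
ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)
FINAL_RE = re.compile(r"^Final Answer:\s*(.+)$", re.MULTILINE)


def react_loop(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate model Thought/Action turns with tool Observations."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)  # model writes a Thought plus Action or Final Answer
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(step)
        if action is None:
            transcript += "Observation: no valid action found\n"
            continue
        tool_name, arg = action.group(1), action.group(2)
        tool = TOOLS.get(tool_name)
        result = tool(arg) if tool else f"Unknown tool: {tool_name}"
        transcript += f"Observation: {result}\n"  # fed back on the next turn
    return "No answer within step limit"


def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM: acts once, then answers using the observation."""
    if "Observation:" not in transcript:
        return ("Thought: Hikers value practical gear; check a water filter.\n"
                "Action: search[water filter]")
    return "Thought: It is under $50 and well reviewed.\nFinal Answer: a portable water filter"


print(react_loop("Gift under $50 for someone who loves hiking?", scripted_model))
```

The `max_steps` cap keeps a confused model from looping forever, which matters once real tools and real costs are involved.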

Conclusion

Prompt engineering isn’t just a technical skill. It’s becoming a form of digital literacy. Whether you're building LLM-driven applications, reviewing legal documents, or exploring creative ideas, the way you speak to an LLM shapes what it gives back.

As AI becomes more embedded in daily workflows, mastering prompts will be a defining advantage not only for developers, but for marketers, analysts, strategists, and creators of all kinds.

The good news? You don’t need to learn a new programming language.

You just need to write more intentionally in the one you already know.

Start building that advantage today.

Ready for the next step? We are too! Contact us and let's make magic together.